Durational reduction in English (and Polish) words shows a contextual frequency effect

APAP 2019

Kamil Kaźmierski, WA at AMU in Poznań

June 21st, 2019

Overall frequency effect

Higher lexical frequency → Shorter duration (e.g. Jurafsky et al. 2001)

Perhaps caused by speakers: frequency of use causes articulatory reduction

Perhaps moderated by listeners' expectations: highly frequent forms don't have to be pronounced carefully

Such reduction could result from online computation, performed on abstract phonological representations (cf. Levelt et al. 1999)

Contextual frequency effect on variation

| Language | Effect | Source |
|---|---|---|
| English | Prevocalic word-final /t/ glottalized more in words typically followed by consonants | Eddington & Channer (2010) |
| English | Word-final /t,d/ deletion more likely in words typically followed by consonants | Raymond et al. (2016) |
| English | Unstressed ING more likely to be /ɪn/ in words frequently occurring in /ɪn/-favoring contexts | Forrest (2017) |
| Spanish | Latin /fV-/ words frequently occurring after word-final non-high vowels likely to be […] | Brown & Raymond (2012) |
| New Mexican Spanish | Word-initial /s/ more likely to be reduced ([s] > [h] > [Ø]) in words often preceded by word-final non-high vowels | Raymond & Brown (2012) |
| English | Words that are typically predictable reduce more in duration | Seyfarth (2014) |

Predictability and Informativity

Predictability

How likely is the current word given the word the speaker said right before it?

Transitional/conditional probability (cf. Jurafsky et al. 2001): $P(w_i \mid w_{i-1}) = \frac{C(w_{i-1} w_i)}{C(w_{i-1})}$

One problem: bigrams with 0 occurrences in the corpus

A solution: add 1 to each bigram count

A better solution: Modified Kneser-Ney smoothing (cf. Chen & Goodman 1998) (r-cmscu R package)
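The count-based estimate and the add-one fix can be sketched as follows. This is a minimal illustration, not the pipeline used in the study (which relied on modified Kneser-Ney smoothing); the function name `bigram_model`, the parameter `add_k`, and the toy corpus are all assumptions made for the example.

```python
from collections import Counter


def bigram_model(tokens, add_k=0):
    """Return prob(prev, word), an estimate of P(word | prev) from bigram counts."""
    history_counts = Counter(tokens[:-1])             # C(w_{i-1}): tokens that start a bigram
    bigram_counts = Counter(zip(tokens, tokens[1:]))  # C(w_{i-1} w_i)
    vocab_size = len(set(tokens))

    def prob(prev, word):
        # With add_k=0 this is the plain relative frequency C(prev word) / C(prev).
        numerator = bigram_counts[(prev, word)] + add_k
        denominator = history_counts[prev] + add_k * vocab_size
        return numerator / denominator if denominator else 0.0

    return prob


# Hypothetical toy corpus, for illustration only.
toy_tokens = "a nice home a nice day a fortress-like home".split()

mle = bigram_model(toy_tokens)               # unsmoothed relative frequencies
add_one = bigram_model(toy_tokens, add_k=1)  # add 1 to each bigram count

print(mle("nice", "home"))              # seen bigram
print(mle("fortress-like", "day"))      # unseen bigram: probability 0 without smoothing
print(add_one("fortress-like", "day"))  # unseen bigram gets a small non-zero probability
```

Add-one smoothing removes the zero probabilities but shifts a lot of probability mass to unseen bigrams when the vocabulary is large, which is one reason to prefer a method such as Kneser-Ney.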

High vs. low predictability

nice home

Lower (< 0.001) predictability of home given nice

fortress-like home

Higher (0.412) predictability of home given fortress-like

Higher predictability → More reduction

nice home

Lower predictability of home given nice

→ Less reduction in home

fortress-like home

Higher predictability of home given fortress-like

→ More reduction in home

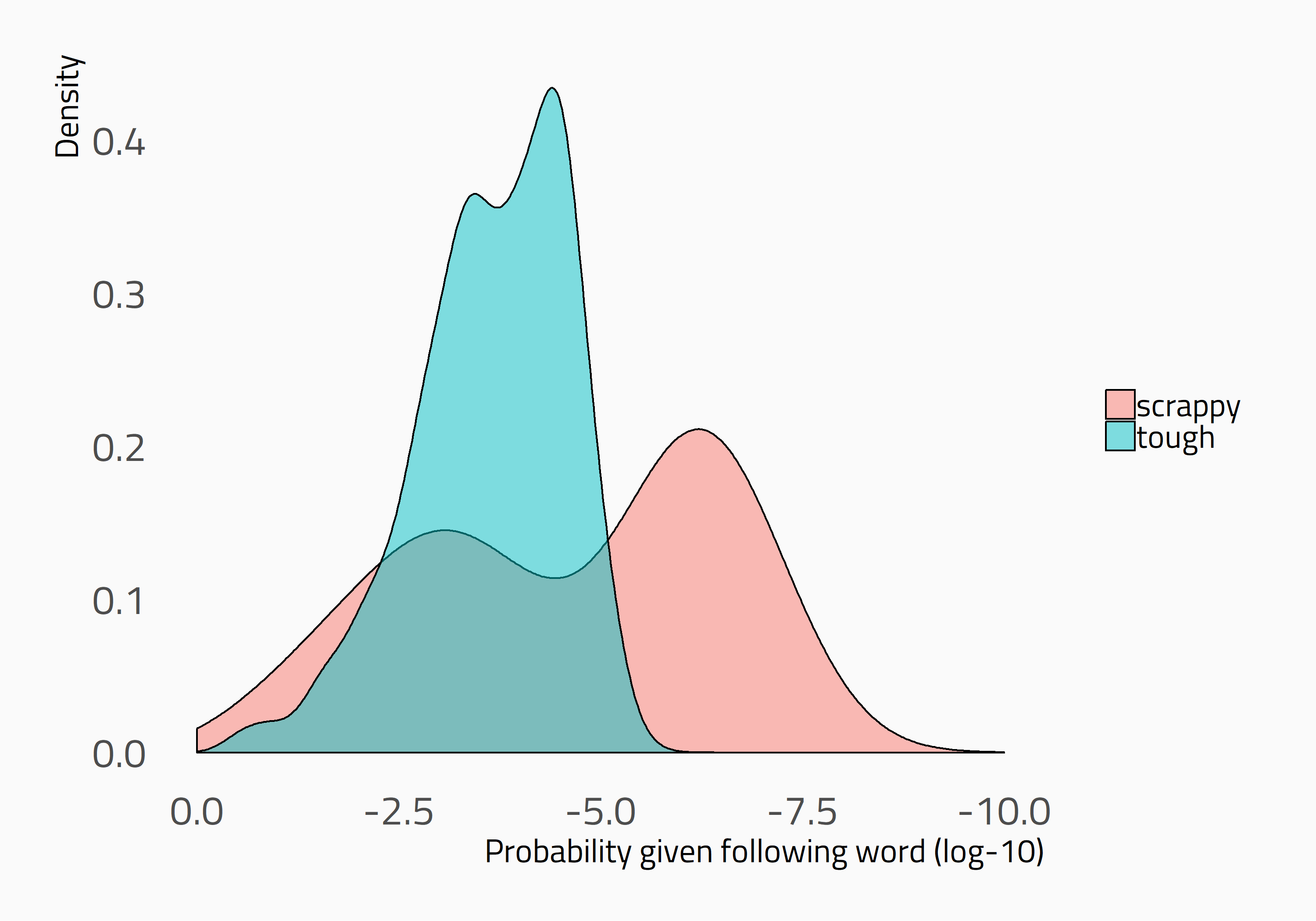

Right-context predictability: How likely is this word, given the word the speaker is about to say next?

home wrecker

Higher predictability of home given wrecker

→ More reduction in home

home course

Lower predictability of home given course

→ Less reduction in home
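Right-context predictability can be estimated from the same bigram counts by conditioning on the following word instead of the preceding one. The sketch below is unsmoothed and uses a hypothetical toy corpus; the function name `right_context_model` is an assumption for the example.

```python
from collections import Counter


def right_context_model(tokens):
    """Return prob(word, nxt), an estimate of P(word | following word = nxt)."""
    following_counts = Counter(tokens[1:])            # C(w_{i+1}): tokens that end a bigram
    bigram_counts = Counter(zip(tokens, tokens[1:]))  # C(w_i w_{i+1})

    def prob(word, nxt):
        total = following_counts[nxt]
        return bigram_counts[(word, nxt)] / total if total else 0.0

    return prob


# Hypothetical toy corpus, for illustration only.
toy_tokens = "home wrecker of course home course of course".split()
p_right = right_context_model(toy_tokens)

print(p_right("home", "wrecker"))  # "wrecker" is always preceded by "home" here
print(p_right("home", "course"))   # "course" is usually preceded by other words
```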

Two different predictability "profiles"

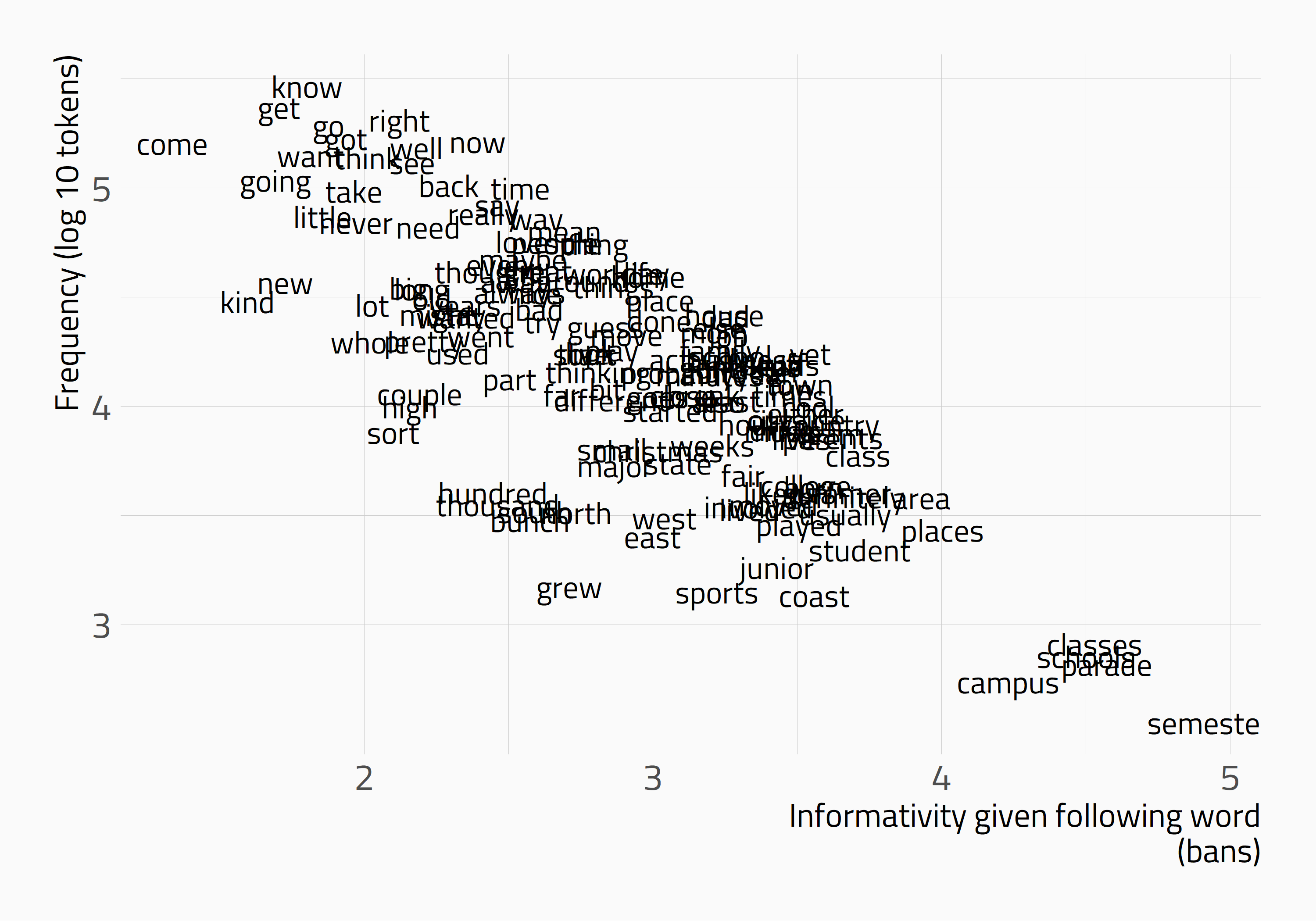

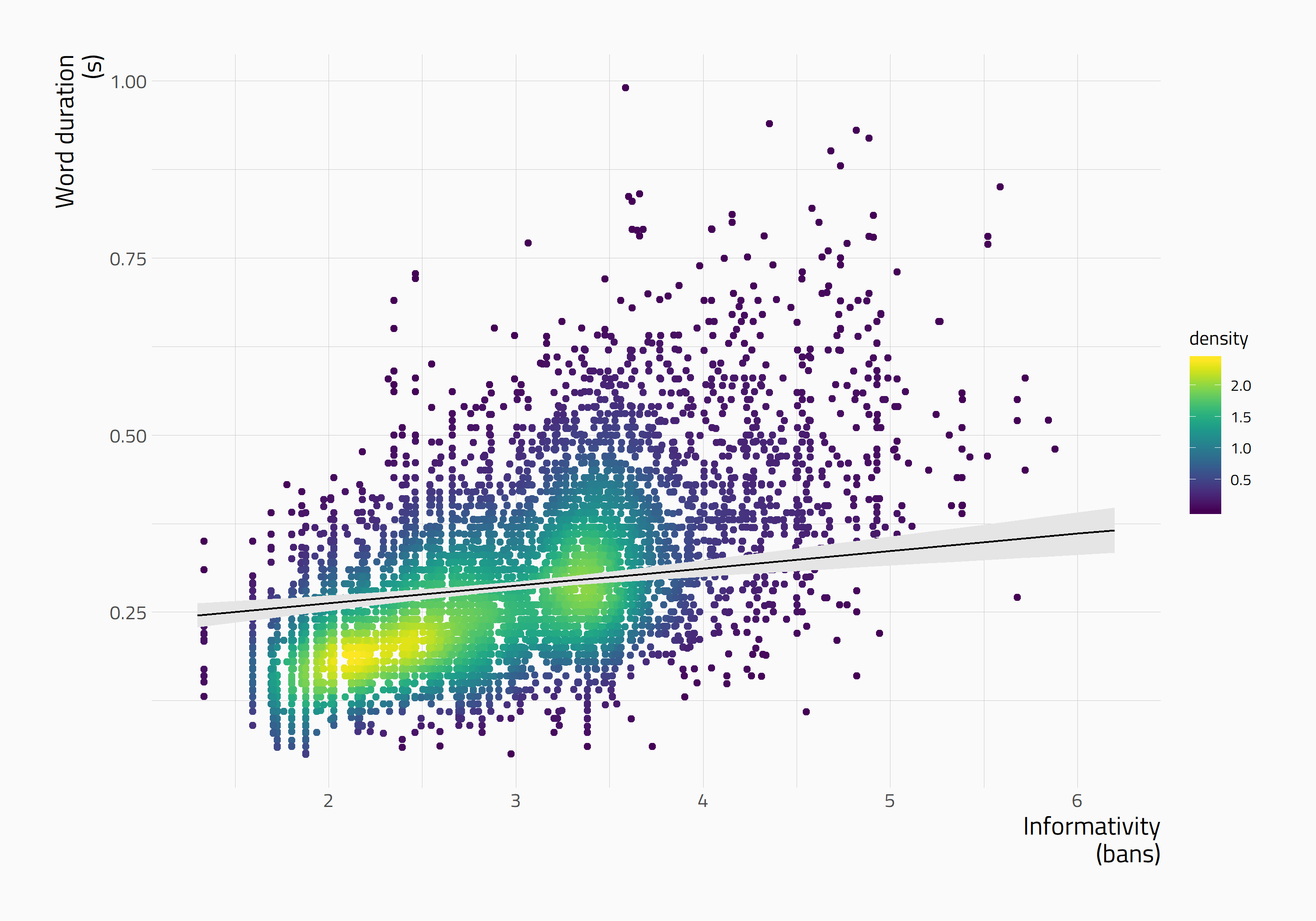

Informativity: How unpredictable from its context is this word on average?

Calculating Informativity

Take the Kneser-Ney smoothed probability of a particular word token in a particular context:

$P(W = w \mid C = c_i)$

Take its logarithm:

$\log P(W = w \mid C = c_i)$

Seyfarth used bans (base-10 logarithm), as is apparently usual; I used the natural logarithm (R's default) in nsp and corrected it to bans for gpsc.

Sum over all $N$ tokens of the word:

$\sum_{i=1}^{N} \log P(W = w \mid C = c_i)$

Divide by $N$ to get the average:

$\frac{1}{N} \sum_{i=1}^{N} \log P(W = w \mid C = c_i)$

Negate, so that less predictable words get higher values:

$-\frac{1}{N} \sum_{i=1}^{N} \log P(W = w \mid C = c_i)$

Steven T. Piantadosi, Harry Tily & Edward Gibson. 2011. "Word lengths are optimized for efficient communication", Proceedings of the National Academy of Sciences 108(9), 3526-3529.
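The informativity formula can be sketched in a few lines. The function name `informativity` and the probability values below are hypothetical; in practice the probabilities would come from the smoothed language model, one per token of the word.

```python
import math


def informativity(context_probs, log=math.log10):
    """Mean negative log probability of a word over its N tokens (bans by default)."""
    if not context_probs:
        raise ValueError("need at least one context probability")
    return -sum(log(p) for p in context_probs) / len(context_probs)


# Hypothetical P(W = w | C = c_i) values for two words with three tokens each.
mostly_unpredictable = [0.001, 0.01, 0.001]
mostly_predictable = [0.1, 1.0, 0.1]

print(informativity(mostly_unpredictable))  # higher informativity
print(informativity(mostly_predictable))    # lower informativity

# Switching from base-10 to natural logarithms only rescales the measure
# by a constant factor of ln(10).
print(informativity(mostly_unpredictable, log=math.log))
```

Because the choice of logarithm base only rescales the values, it changes the size of the model coefficient but not the direction or significance of the effect.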

Informativity vs. Frequency

Predictability vs. Informativity

Word frequently occurs in low-predictability contexts → high informativity

Word frequently occurs in high-predictability contexts → low informativity

Target study: Seyfarth (2014)

Durational reduction in Buckeye (Pitt et al. 2007) and Switchboard-1 Release 2 (Calhoun et al. 2009; Godfrey & Holliman 1997)

Predictability and informativity estimated from the Fisher Part 2 corpus (Cieri et al. 2005)

Findings:

Higher left-context and right-context predictability → More reduction

Higher right-context (both corpora) or left-context (Switchboard) informativity → Less reduction

Implications:

Predictability and reduction: could be an online effect

Informativity and reduction: suggests storage of reduced forms

Research questions

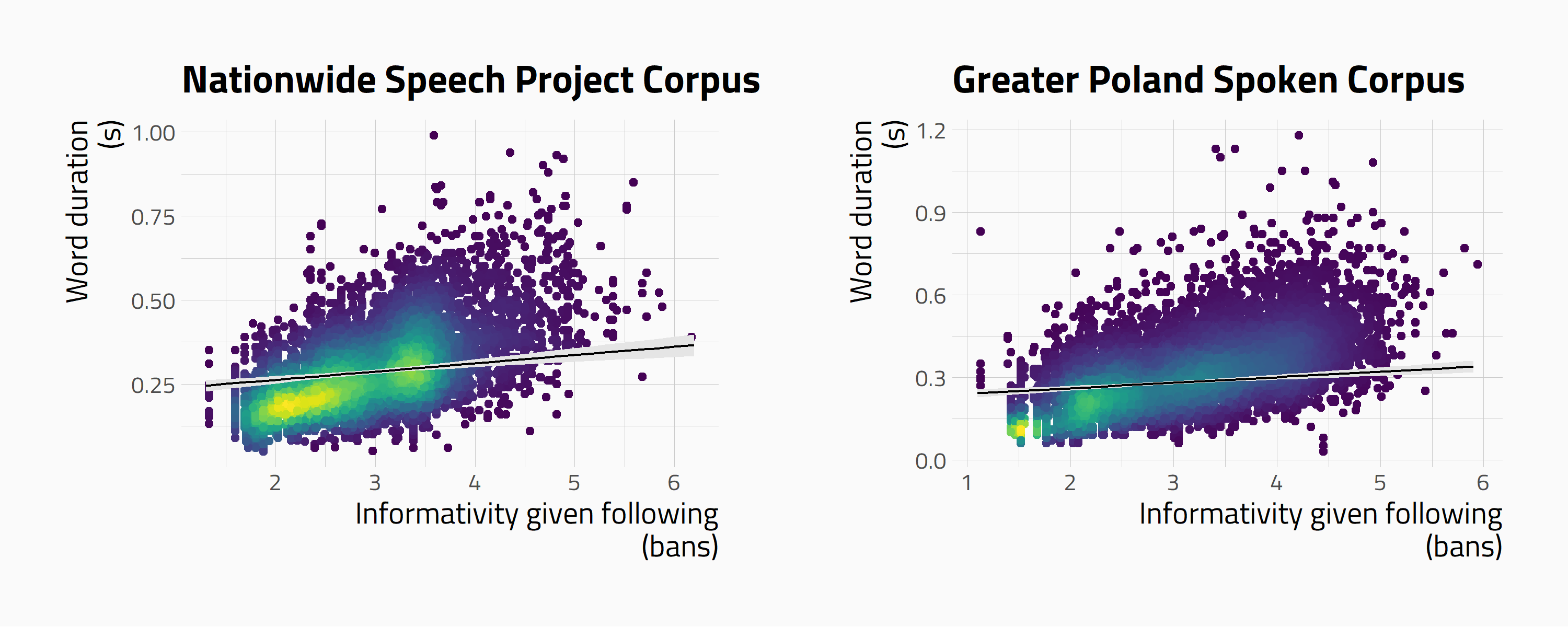

RQ1: Do the results of Seyfarth (2014) replicate on another English dataset?

RQ2: Do these effects generalize to Polish?

Method

Model architecture (N = 7,158)

- Response:

Word duration

Model architecture (N = 7,158)

- Response:

Word duration

- Predictors of theoretical interest:

Predictability given previous,Informativity given previous,Predictability given following,Informativity given following

Model architecture (N = 7,158)

- Response:

Word duration

- Predictors of theoretical interest:

Predictability given previous,Informativity given previous,Predictability given following,Informativity given following

- "Control" predictors:

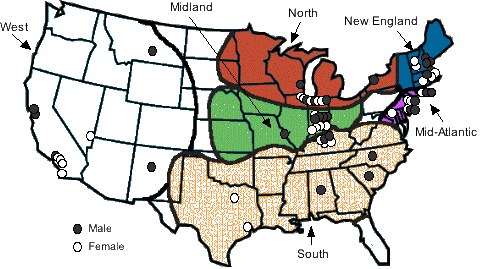

Part of speech,Orthographic length,No. of syllables,Dialect,Average speaking rate,Rate deviation

Model architecture (N = 7,158)

- Response:

Word duration

- Predictors of theoretical interest:

Predictability given previous,Informativity given previous,Predictability given following,Informativity given following

- "Control" predictors:

Part of speech,Orthographic length,No. of syllables,Dialect,Average speaking rate,Rate deviation

- Random terms:

(1|Word),(1 + Informativity given following + Informativity given previous | Speaker)
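Written out as an equation, the architecture above corresponds to a linear mixed-effects model of roughly the following form. This is a sketch under stated assumptions: the symbols are introduced here for illustration, the control predictors are collapsed into a single vector $\mathbf{x}_i$, and the slides do not say whether duration was transformed before modeling.

```latex
% y_i: duration of token i; w[i], s[i]: the word type and speaker of token i (assumed notation)
\begin{aligned}
y_i ={}& \beta_0
  + \beta_1\,\mathrm{PredPrev}_i + \beta_2\,\mathrm{InfPrev}_{w[i]}
  + \beta_3\,\mathrm{PredFoll}_i + \beta_4\,\mathrm{InfFoll}_{w[i]}
  + \boldsymbol{\gamma}^{\top}\mathbf{x}_i \\
 & + u_{w[i]}
  + v_{0,s[i]} + v_{1,s[i]}\,\mathrm{InfFoll}_{w[i]} + v_{2,s[i]}\,\mathrm{InfPrev}_{w[i]}
  + \varepsilon_i
\end{aligned}
```

Here $u_{w[i]}$ is the by-word random intercept from (1 | Word), and $v_{0}$, $v_{1}$, $v_{2}$ are the by-speaker random intercept and the two by-speaker random slopes for informativity.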

Results

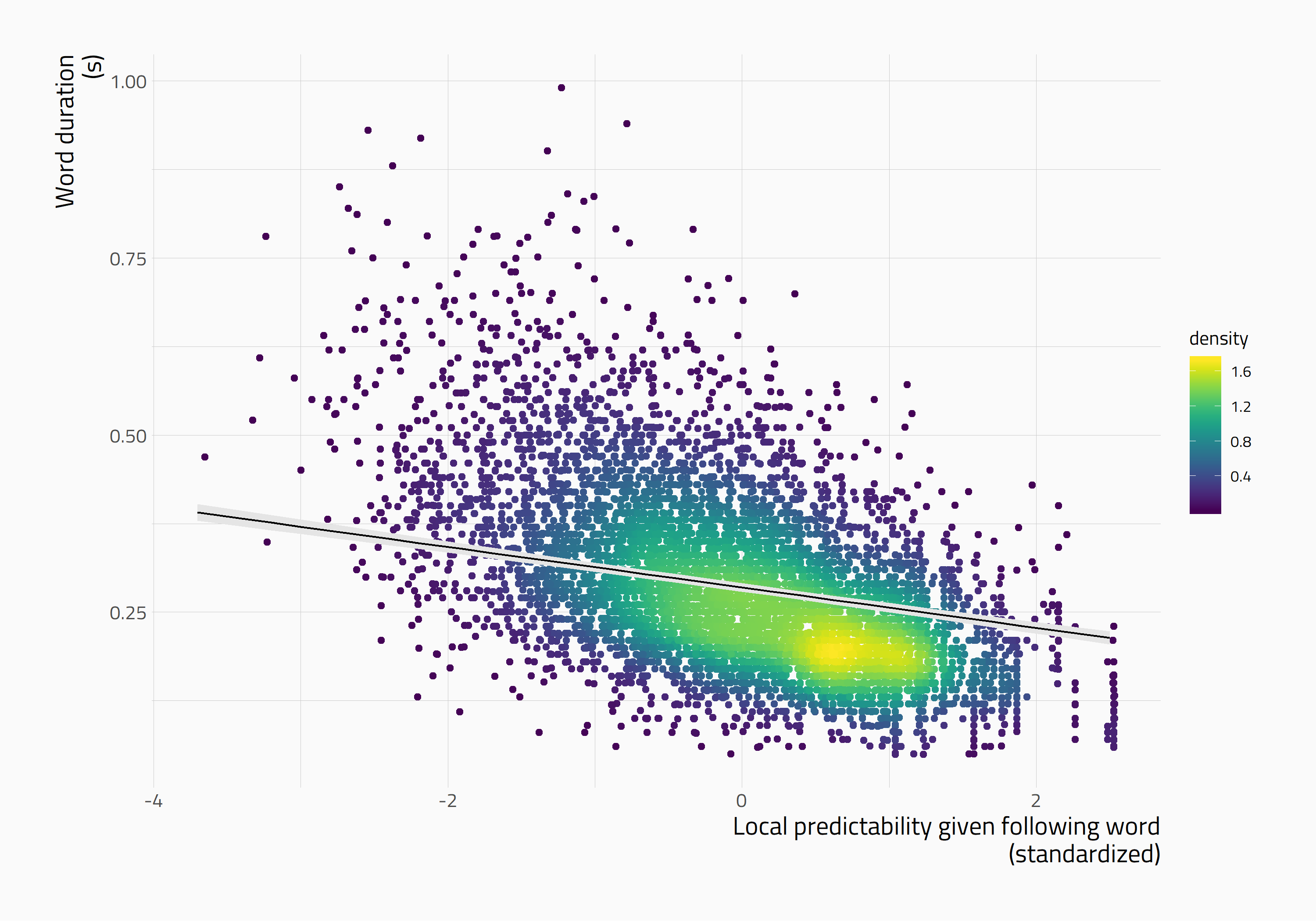

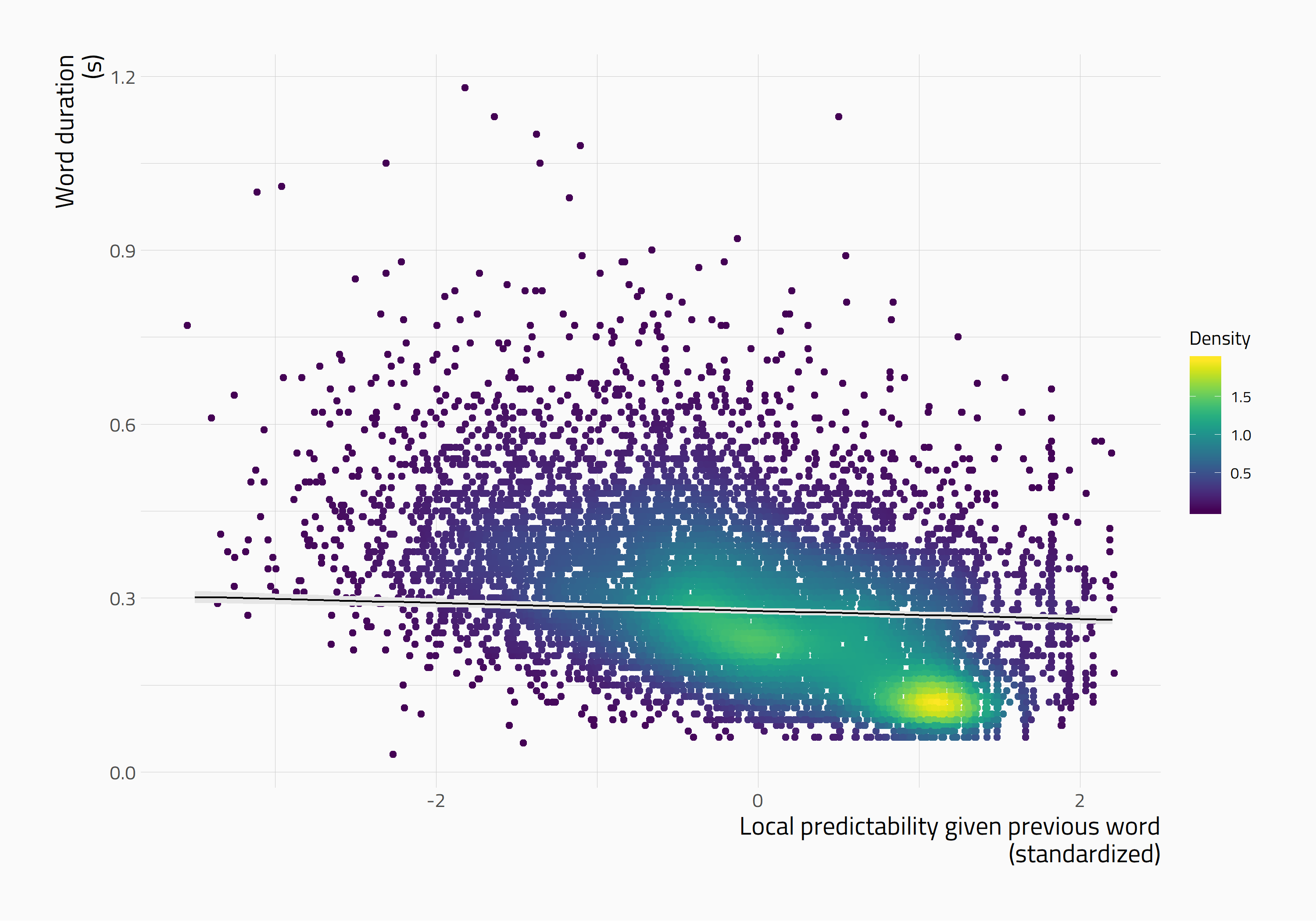

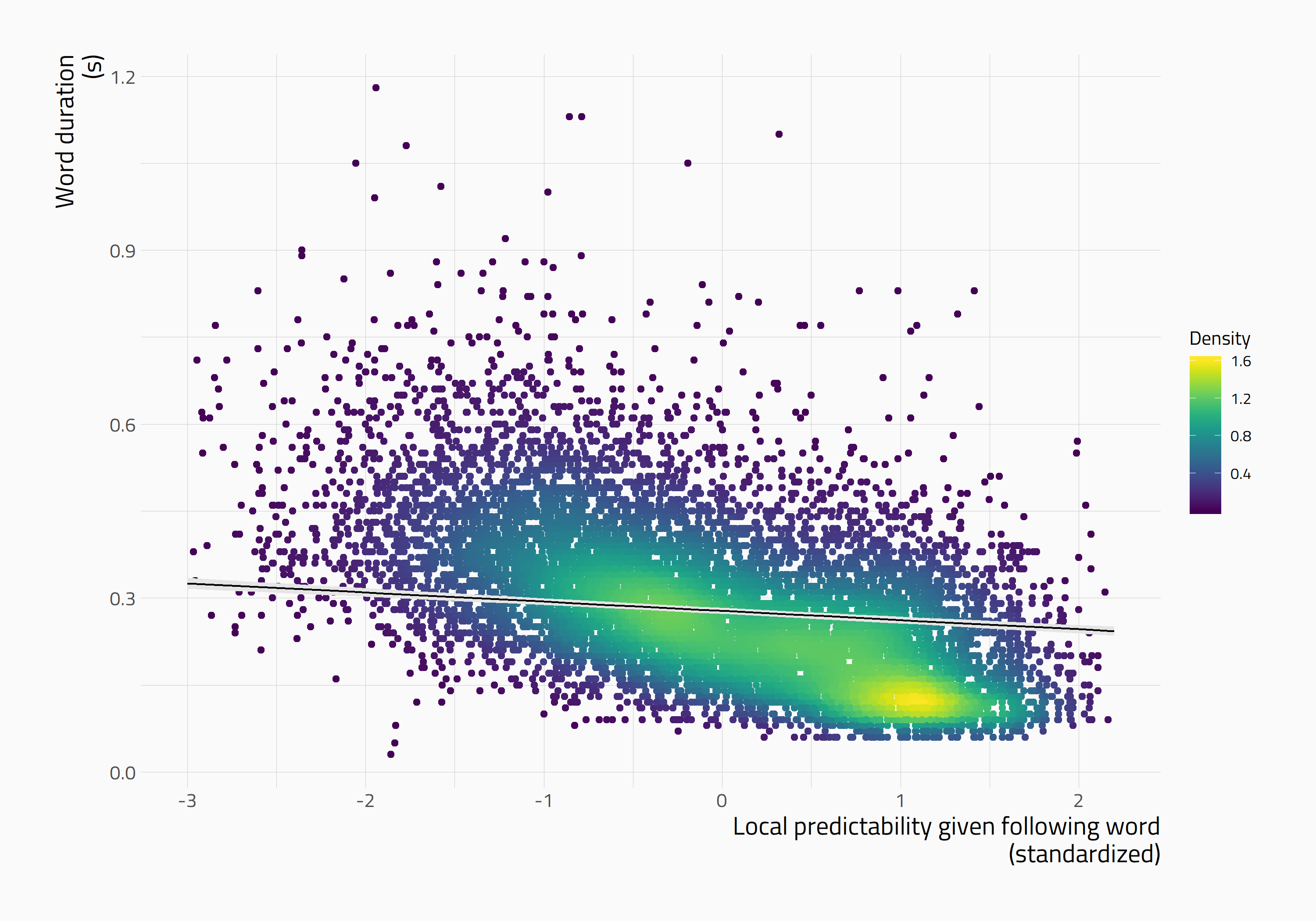

Predictability given following

β = -0.029, p < 0.001

Informativity given following

β = 0.025, p < 0.001

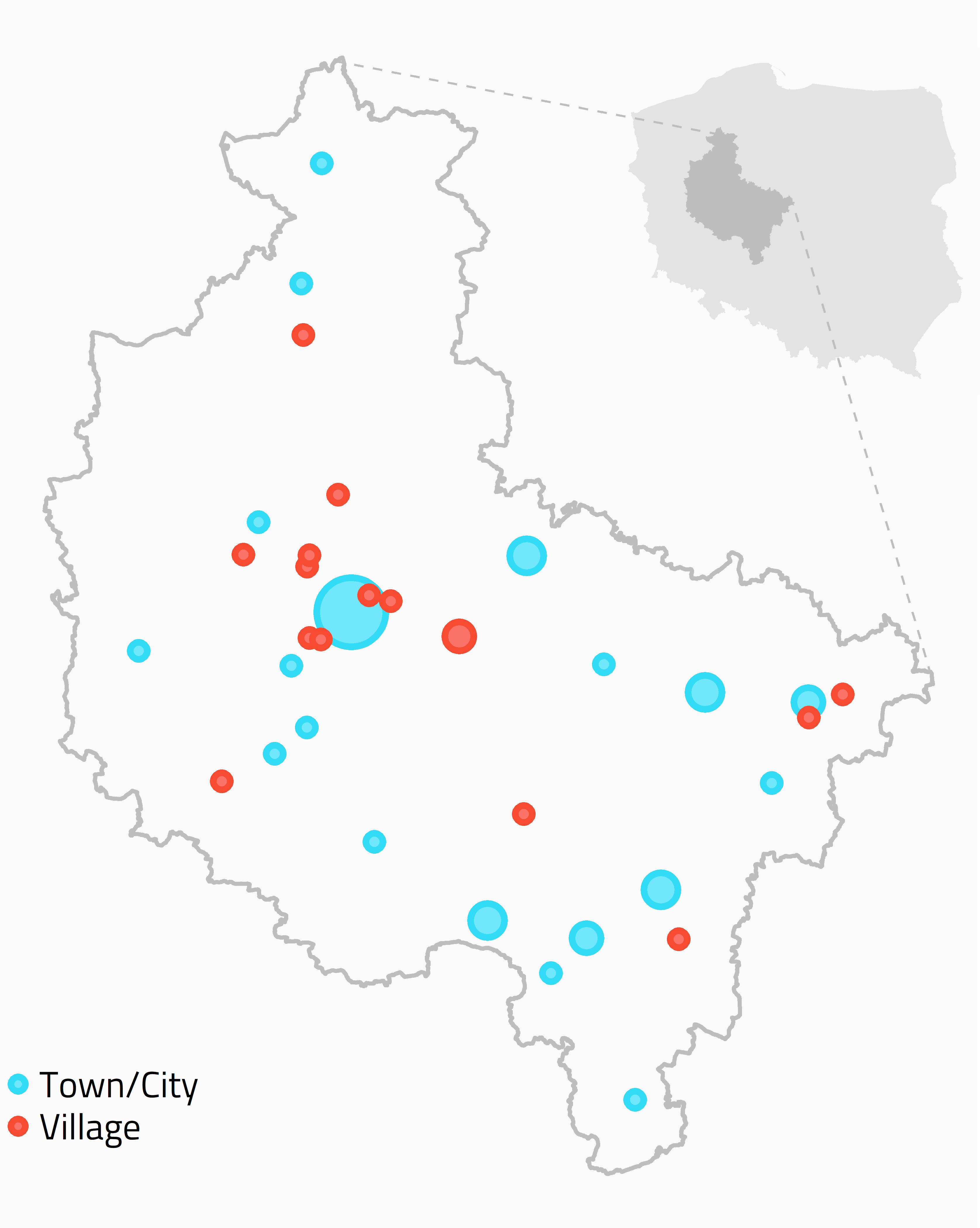

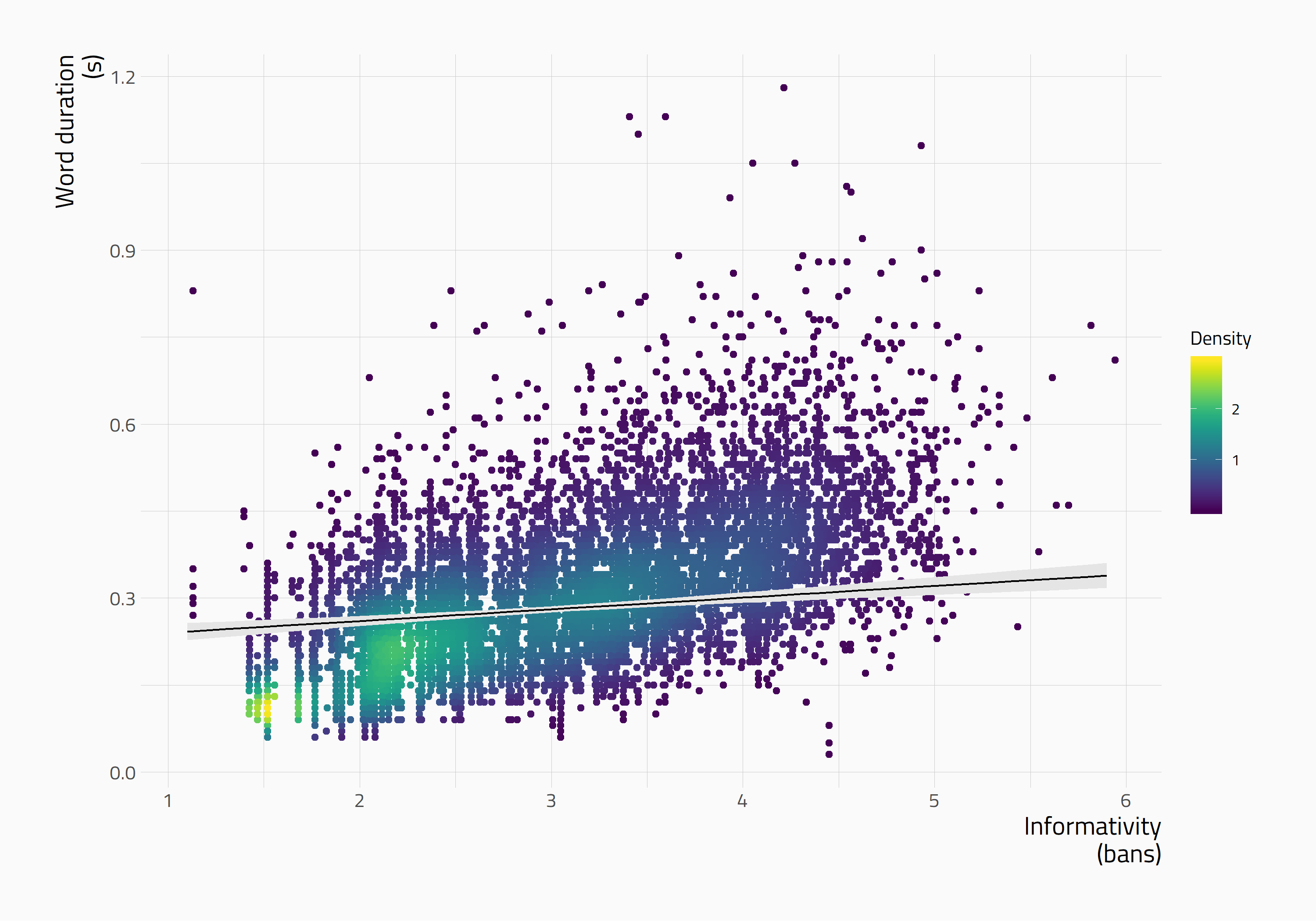

Source of Polish data (N = 10,588)

Predictability given previous

β = -0.007, p < 0.001

Predictability given following

β = -0.016, p < 0.001

Informativity given following

β = 0.02, p < 0.001

Summary of results

RQ1: Do the results of Seyfarth (2014) replicate on another English dataset?

✓ Yes

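Differences from Seyfarth (2014): no significant effect of predictability given previous, and no significant effect of informativity given previous (which Seyfarth found for Switchboard only)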

RQ2: Do these effects generalize to Polish?

✓ Yes

Local predictability given both the previous and the following word, as well as informativity given the following word, are significant predictors of word duration in Polish

Conclusions

→ The effect of local predictability is stronger for right-hand context than left-hand context

→ On top of local predictability, both English and Polish show the effect of informativity

→ The latter effect suggests phonological storage of reduced forms

Thank you!

Right-context informativity influences word durations in English and in Polish

kamil.kazmierski@wa.amu.edu.pl

Model summary: English

| | Term | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|---|
| 1 | (Intercept) | 0.256 | 0.015 | 17.131 | < 0.001 |
| 2 | local_pred_given_prev_sd | -0.001 | 0.001 | -0.952 | 0.341 |
| 3 | inf_given_prev | 0.002 | 0.005 | 0.444 | 0.657 |
| 4 | local_pred_given_foll_sd | -0.029 | 0.001 | -20.253 | < 0.001 |
| 5 | inf_given_foll | 0.025 | 0.005 | 5.004 | < 0.001 |
| 6 | posAdjective | -0.013 | 0.008 | -1.745 | 0.081 |
| 7 | posAdverb | -0.036 | 0.008 | -4.683 | < 0.001 |
| 8 | posVerb | -0.031 | 0.006 | -5.486 | < 0.001 |

Model summary: Polish

| | Term | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|---|
| 1 | (Intercept) | 0.251 | 0.012 | 20.612 | < 0.001 |
| 2 | pred_giv_prev_sd | -0.007 | 0.001 | -5.299 | < 0.001 |
| 3 | inf_giv_prev | -0.007 | 0.004 | -1.881 | 0.06 |
| 4 | pred_giv_foll_sd | -0.016 | 0.001 | -12.281 | < 0.001 |
| 5 | inf_giv_foll | 0.02 | 0.004 | 5.507 | < 0.001 |
| 6 | posadj | -0.001 | 0.006 | -0.112 | 0.911 |
| 7 | posadv | -0.001 | 0.007 | -0.132 | 0.895 |
| 8 | posverb | -0.02 | 0.005 | -4.349 | < 0.001 |